Senator accuses Big Tech of illegally using copyrighted books to train AI models

In recent legal proceedings, the use of copyrighted materials by AI companies such as Meta Platforms and Anthropic to train large language models (LLMs) has come under close examination. At issue is whether these tech giants are violating copyright law by sourcing vast amounts of data from unlicensed "shadow libraries."

The most recent developments in this ongoing dispute are the rulings in Kadrey v. Meta Platforms, Inc. and Bartz v. Anthropic. In the Meta case, U.S. District Judge Vince Chhabria ruled that the company's use of copyrighted works for AI training could be considered fair use under U.S. copyright law. However, Judge Chhabria emphasized that copyright holders could defeat a fair use defense by demonstrating market harm. In the Anthropic case, U.S. District Judge William Alsup partially sided with authors Andrea Bartz, Charles Graeber, and Kirk Wallace Johnson, finding that Anthropic's copying and storage of more than 7 million pirated books in a "central library" infringed the authors' copyrights and was not fair use. A trial has been scheduled for December to determine how much Anthropic owes for the infringement.

These decisions have significant implications for both AI companies and copyright law. For AI companies, they suggest that fair use may be a viable defense, particularly if the AI output does not infringe on the original works. However, the use of pirated content increases the risk of infringement liability. Market harm will be crucial in future cases, and AI companies must be prepared to address it in their fair use arguments.

For copyright law, the application of the fair use doctrine to AI is still evolving. Courts are acknowledging the need for fact-specific analysis, meaning each case will be evaluated on its own merits, taking into account the specifics of AI technology and its impacts. Future legislation and guidance may be necessary to clarify how fair use applies in AI contexts, especially as more industries become involved.

Copyright holders may need to implement strategies like "no train" clauses in licenses to protect their works from unauthorized use in AI training. AI companies argue that their systems make fair use of copyrighted material to create new, transformative content, and that being forced to pay copyright holders for their work could hamstring the booming AI industry.

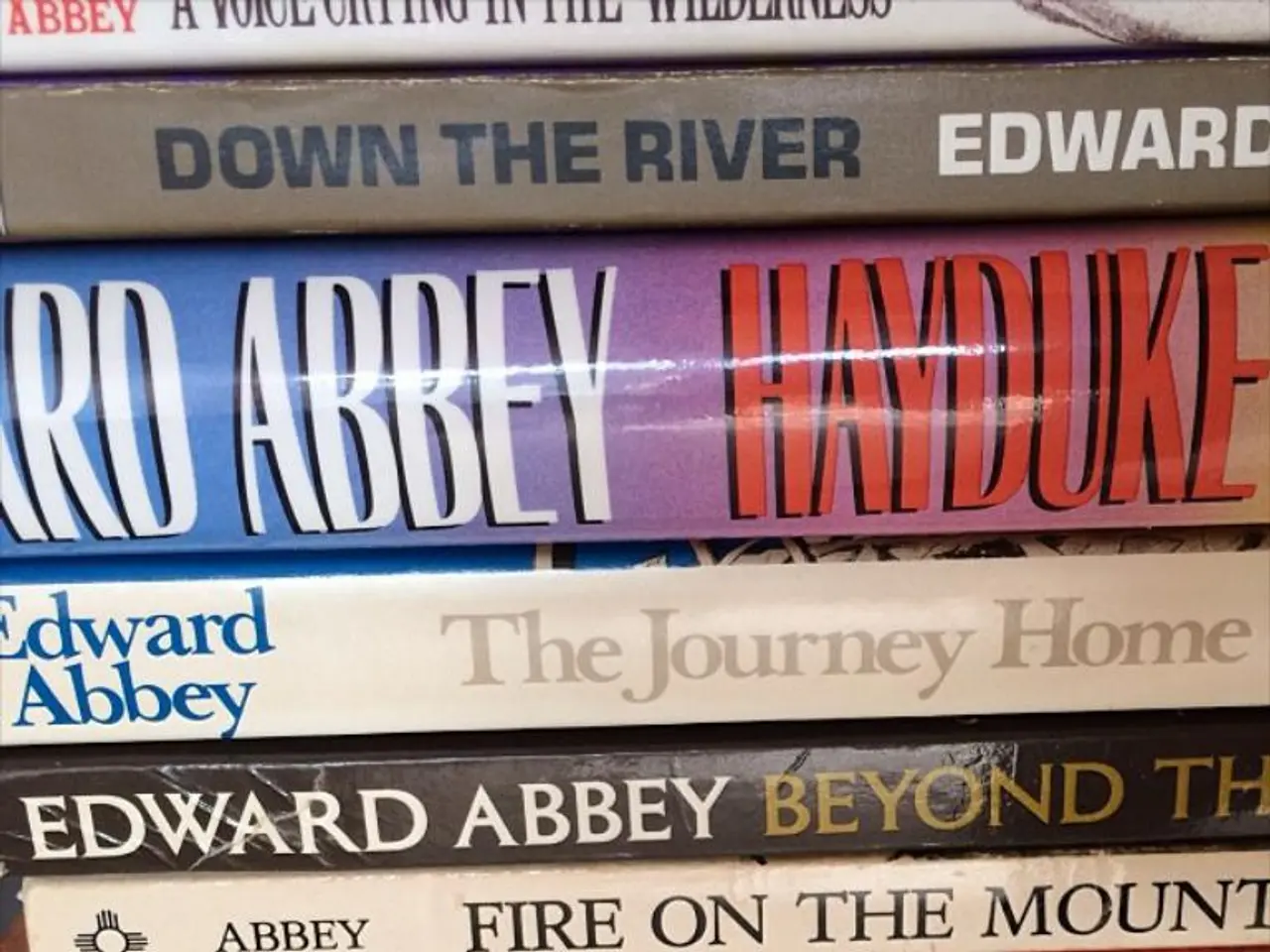

Notably, Maxwell Pritt, who represents authors in legal cases, claimed that Meta had torrented over 200 terabytes of copyrighted material from multiple "illicit criminal enterprises." During a hearing, Sen. Josh Hawley, R-Mo., questioned Meta over allegations that it pirated authors' works without payment to develop its AI.

As the AI industry continues to grow and evolve, the question of how to balance the need for vast amounts of data for training AI systems with the rights of copyright holders remains a complex and contentious issue. The ongoing legal battles between AI companies and copyright holders will undoubtedly shape the future of AI development and the broader copyright landscape.

- The rulings in Kadrey v. Meta Platforms, Inc. and Bartz v. Anthropic have substantial implications for AI companies: they suggest that fair use may be a viable defense, but that the use of pirated content increases the risk of infringement liability.

- Applying the fair use doctrine to AI requires fact-specific analysis, with each case evaluated individually in light of the specifics of AI technology and its effects.

- Copyright holders may need to add "no train" clauses to their licenses to protect their works from unauthorized use in AI training.

- The ongoing legal disputes between AI companies and copyright holders are poised to shape both the development of AI and the broader copyright landscape.