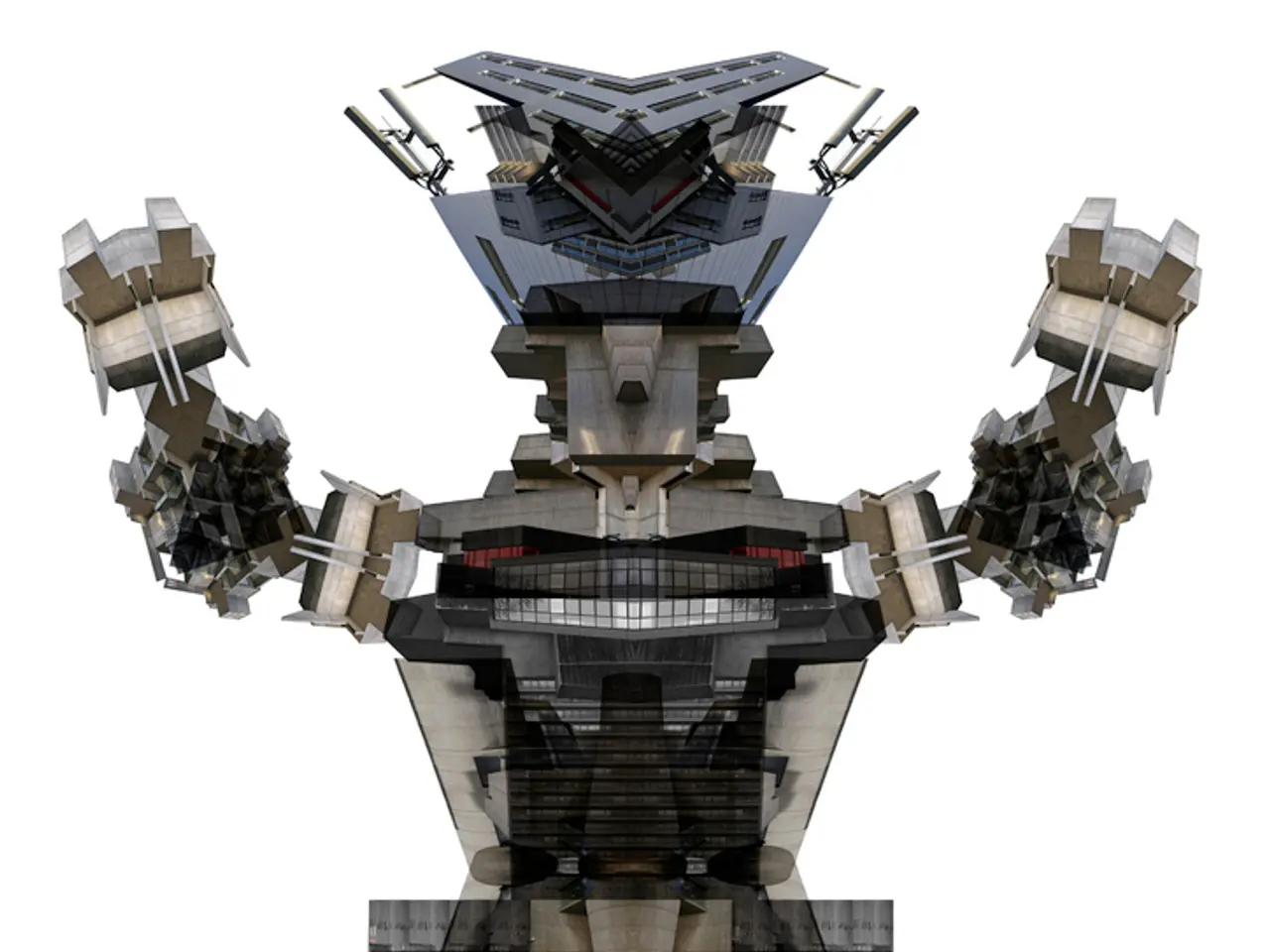

Robotics' Autonomy-Computation Bond

In robotics, progress has been uneven: each advance in autonomy demands an exponential increase in power. The curve of computing power versus autonomy performance makes this concrete. Locomotion can be achieved with ~50 W embedded CPUs, dexterity requires ~200 W, and autonomy demands 700 W or more.

The pursuit of robotic autonomy runs into the limits of mobile platforms. High-capacity batteries cannot sustain a 700 W draw for long, and dissipating the resulting heat is a serious barrier in its own right. This 700 W barrier is the central obstacle to autonomy, and beyond it, adding more brute-force compute yields diminishing returns.
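To see why a 700 W draw is hard to sustain on a mobile platform, divide battery energy by power draw. The sketch below assumes a hypothetical 1 kWh pack dedicated entirely to compute (the capacity is an illustrative assumption, not a figure from the text) and uses the ~50 W, ~200 W, and 700 W tiers quoted above. It ignores actuators, sensors, and conversion losses, so real runtimes would be shorter.

```python
"""Back-of-the-envelope battery runtime for the compute tiers quoted in the text."""

# Assumption: a hypothetical 1 kWh battery pack reserved for compute. Real robots
# differ widely, and actuators, sensors, and conversion losses draw extra power.
BATTERY_WH = 1000.0

# Approximate compute power per capability tier, in watts (figures from the text).
COMPUTE_TIERS_W = {
    "locomotion": 50.0,
    "dexterity": 200.0,
    "autonomy": 700.0,
}


def runtime_hours(battery_wh: float, load_w: float) -> float:
    """Idealized runtime: usable energy divided by a constant power draw."""
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    return battery_wh / load_w


if __name__ == "__main__":
    # Print the idealized runtime for each tier on the same hypothetical pack.
    for tier, watts in COMPUTE_TIERS_W.items():
        hours = runtime_hours(BATTERY_WH, watts)
        print(f"{tier:>10}: {watts:5.0f} W -> {hours:5.2f} h")
```

Under these assumptions the same pack runs the locomotion tier for 20 h but the autonomy tier for under 1.5 h, which illustrates the energy side of the 700 W barrier.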

Current AI-driven robotics relies on brute-force hardware: cutting-edge GPUs backed by dedicated thermal management and power systems. Even so, today's AI systems need 700 W or more of GPU power to achieve only limited autonomy. They are constrained by inference speed, memory bandwidth, and heat, and they still fall short of the human brain while consuming 35x more power.

The efficiency gap between humans and machines is therefore a central concern: machines burn enormous amounts of energy yet fail to match the adaptive intelligence of humans. Seen through the compute-autonomy relationship, the robotics challenge becomes one of building systems that can think within a fixed power budget.

To overcome these challenges, the field must move beyond brute-force GPUs toward novel architectures. Proposed directions include neuromorphic chips with ultra-low-power designs and spiking neural networks, quantum-classical hybrids, and photonic computing, each aiming for orders-of-magnitude gains in efficiency.
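Spiking neural networks communicate through discrete spike events rather than dense activations, which is what lets neuromorphic hardware stay largely idle when little is happening. The sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a standard textbook model used in many SNNs. It is not the neuron model of any particular chip, and every parameter value is an illustrative assumption.

```python
"""Minimal leaky integrate-and-fire (LIF) neuron, a common building block of SNNs."""


def simulate_lif(
    input_current: list[float],
    dt: float = 1.0,
    tau: float = 20.0,
    v_rest: float = 0.0,
    v_threshold: float = 1.0,
    v_reset: float = 0.0,
    resistance: float = 1.0,
) -> tuple[list[float], list[int]]:
    """Integrate tau * dv/dt = -(v - v_rest) + R * I with forward Euler.

    Returns the membrane-potential trace and the step indices at which spikes fired.
    Units are arbitrary; the defaults are illustrative, not tied to any hardware.
    """
    v = v_rest
    trace: list[float] = []
    spike_steps: list[int] = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input current.
        v += dt * (-(v - v_rest) + resistance * current) / tau
        if v >= v_threshold:
            # Emit a discrete spike event and reset the membrane potential.
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return trace, spike_steps


if __name__ == "__main__":
    # Compare spike counts for zero, weak, and strong constant input.
    for level in (0.0, 0.5, 1.5):
        _, spikes = simulate_lif([level] * 200)
        print(f"input {level}: {len(spikes)} spikes in 200 steps")
```

With these defaults, zero or weak input (0.5) never reaches the threshold and produces no spikes, while a stronger input (1.5) makes the neuron fire periodically. Event-driven hardware does work roughly in proportion to spike activity, which is the source of the efficiency neuromorphic designs aim for.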

One promising direction is cognitive-robotic architectures such as HARMONIC, which pair robotic control with cognitive supervision. This combination enables transparent, verifiable reasoning, incremental knowledge updates, and faster inference than conventional AI models. Future hardware will need parallel computing architectures that support real-time reasoning and whole-body control for complex tasks, which often rely on combined policies for locomotion and manipulation.

Integrating quantized large language models (LLMs) with vision-language pretraining improves robot perception, navigation, and multimodal decision-making, but it requires hardware that can run these advanced AI workloads locally and efficiently.
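Quantization shrinks a model by storing its weights in fewer bits, which is what makes running LLMs locally on a robot plausible. The sketch below shows symmetric per-tensor int8 quantization, one simple scheme among many (production LLM quantizers often use per-channel or per-group scales and 4-bit formats), and estimates weight storage for a hypothetical 7-billion-parameter model.

```python
"""Symmetric per-tensor int8 quantization and the memory it saves."""


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, which would otherwise give scale == 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the int8 codes."""
    return [q * scale for q in quantized]


def weights_size_gb(num_params: float, bits_per_param: int) -> float:
    """Storage for the weights alone (no activations or caches), in gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    weights = [0.82, -1.37, 0.05, 0.0, 1.1]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"codes={codes} scale={scale:.5f} max_error={max_err:.5f}")

    # Hypothetical 7-billion-parameter model, for illustration only.
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: {weights_size_gb(7e9, bits):.1f} GB")
```

For the hypothetical 7B model, weights alone drop from 28 GB at 32-bit precision to 14 GB at 16-bit and 3.5 GB at 4-bit; activations and caches add more on top.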

The ultimate goal is human-level efficiency: general intelligence on 20 W. Breaking free of the compute-autonomy bottleneck to reach it will take revolutionary breakthroughs, not incremental upgrades. The future of robotics depends on closing the 35x efficiency gap between human brains and machines.
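The 35x figure follows from dividing the 700 W autonomy tier by the roughly 20 W commonly cited for the human brain:

$$
\frac{P_{\text{robot}}}{P_{\text{brain}}} \approx \frac{700\ \text{W}}{20\ \text{W}} = 35
$$

The ratio compares power draw only, at the autonomy tier; it is not a direct measure of intelligence per watt.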
